GenAI LLM workstation

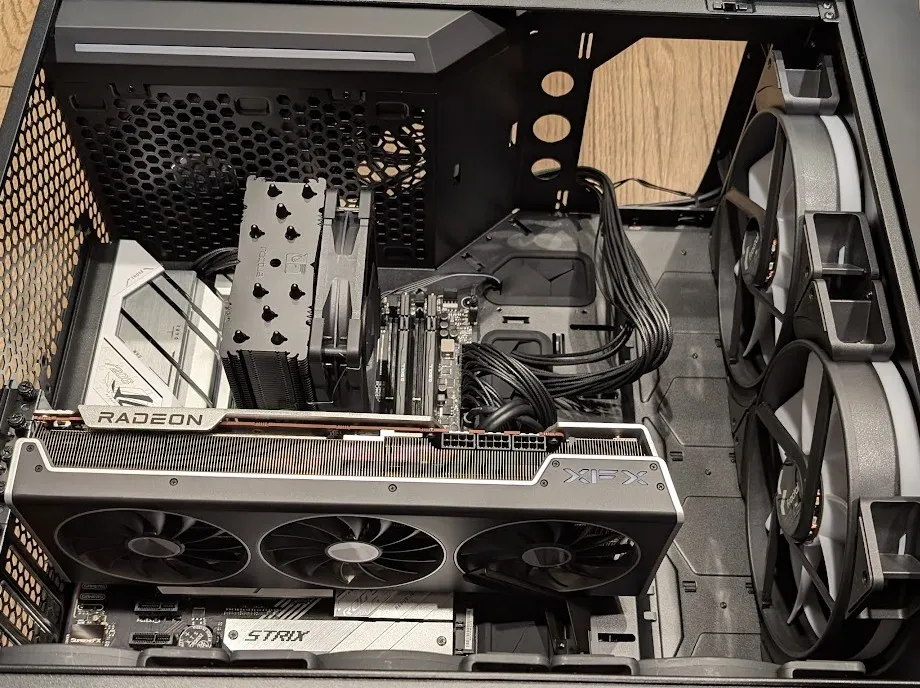

I decided to build a new workstation that could run GenAI models at home and serve them on my local network! Despite already having access to Claude models through the Anthropic API and OpenRouter for many other models, I wanted something that I could experiment with, running Huggingface models, and run smaller tasks at home locally. This build was made for LLM inference and centered around the Radeon 7900 XTX with 24GB of VRAM that nicely fits mid-tier parameter models (~30B).

I’ve tested vLLM and Ollama for the back-end with Open WebUI for the front-end, and KoboldAI. Ollama was a faster start but many posts on the internet claim that vLLM can eek out a bit more performance if you’re willing to play with the settings. As I’m using a Radeon card, ROCm was used instead of Nvidia’s CUDA platform, which is fine as this build will largely be used for inference. Open WebUI can be exposed within my local network to integrate with other tools, like Visual Studio Code’s Continue extension as a coding assistant.

- CPU: AMD Ryzen 7 7800X3D

- GPU: XFX Speedster Merc 310 Radeon 7900 XTX

- Motherboard: ROG Strix B650-A

- RAM: 2xG.SKILL 16GB 6000 Mhz

- CPU cooler: Notcua NH-U12S

- Power supply: ASUS TUF Gaming 1200W

- Computer case: Fractal Design Torrent RGB Black E-ATX

The graphics card takes up quite a bit of space as expected, but the Fractal Torrent case was large enough to accomadate without difficulty during the setup. The motherboard and case include enough space to connect another graphics card if needed in the future.

Radeon’s offical ROCm installation guide was used to install the main driver for the host OS, in my case, Linux Mint. Docker containers were used for vLLM, Ollama, Open WebUI and KoboldAI. YellowRoseCx’s Github has a quickstart Docker build that can get things up and running fairly easily without much tinkering with Docker Compose.

I didn’t originally intend for the build to have RGB but the price difference between non-RGB components wasn’t far off and I figured it would make it look a bit cooler if it lit up the room a bit!

Huggingface and Ollama websites are used to grab the quantized modelfiles.

Shout out to the LocalLLaMa Reddit community for the inspiration!